I recently built a structure out of bamboo poles, inspired by the “Da Vinci bridge” (a simpler, specific case of a reciprocal frame structure). Bamboo is neat: it grows fast, it is cheap (some people will be happy just to have you cut theirs for free), it is quite strong and flexible, and it is relatively resistant to rot even though it is organic and biodegradable; but it is not always easy to work with when it comes to dimensioning…

Tags: #Max

Real-time video matting in Max

I was testing some real-time video matting in Max/MSP, which we developed for an ongoing project (edit: the Cinematon is out!), and realized that the “sunset.jpg” demo file was a nice match for this memorable dialogue in Romero’s “Night of the Living Dead”… If it weren’t for all those zombies outside, it could have been such a lovely campfire scene!

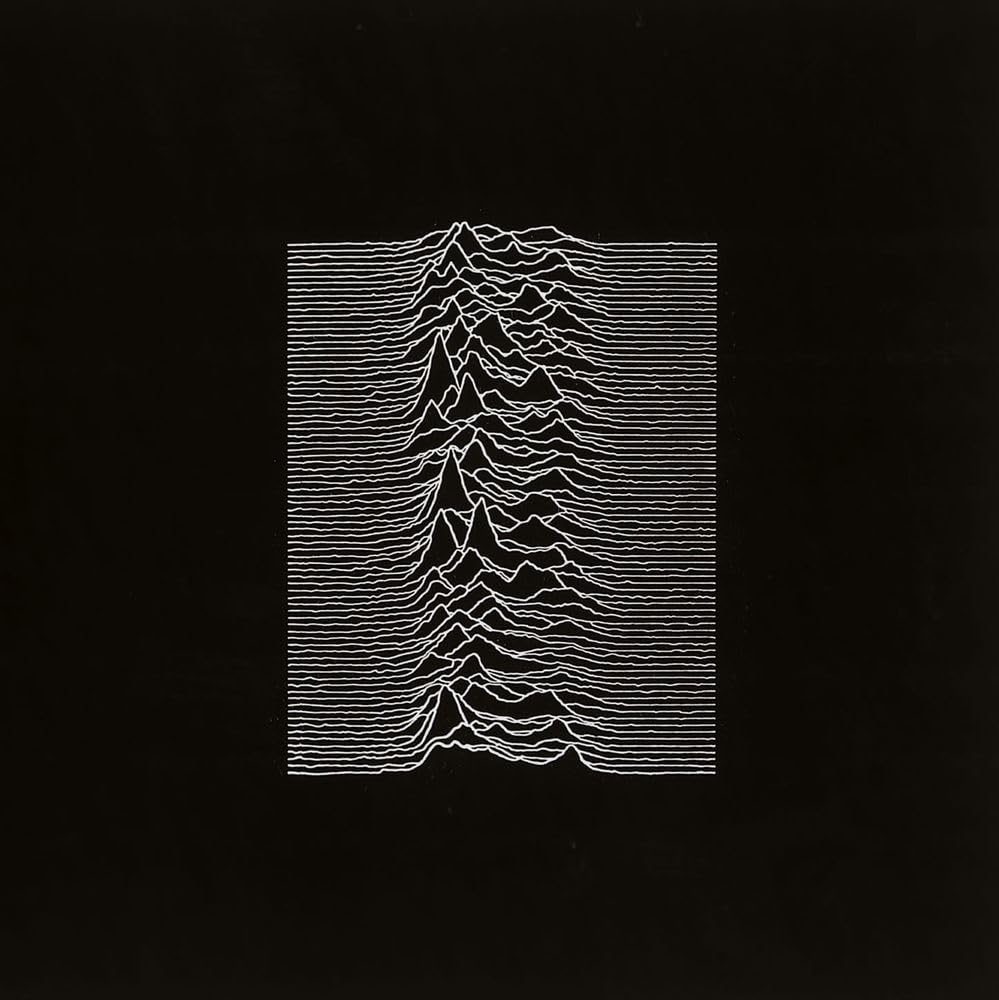

Diving into unknown pleasures

There is something intriguing about the cover of Joy Division’s album “Unknown Pleasures” (1979). The image is often attributed to Peter Saville, the designer for the Factory Records label when the album came out, but all he did was render in white on black a drawing that had already appeared in Scientific American about ten years earlier.

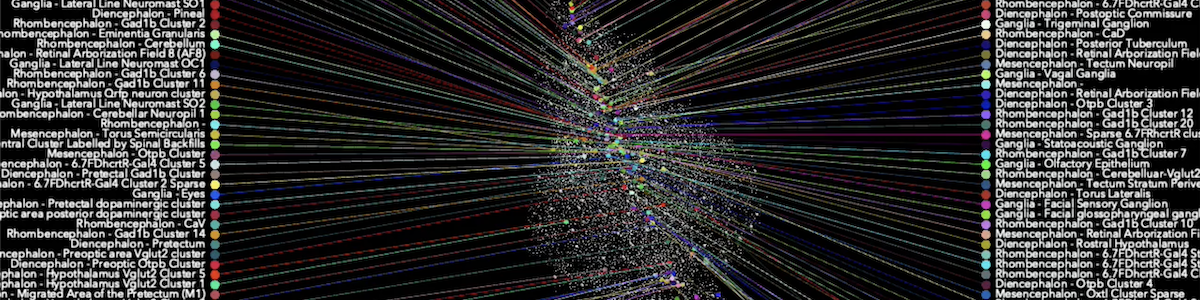

The Brain Orchestra – neural activity sonification

Neural activity data sonification, with Sébastien Wolf (ENS Institute of Biology).

Data sonification could be an effective tool for neuroscience research, complementing data visualization. Recent advances in brain imaging make it possible to record the activity of tens of thousands of mammalian neurons simultaneously and in real time. The spatial and temporal dynamics of neuron activation can then be translated into triggered sounds, according to the functional groups these neurons belong to.

We have developed software that loads such datasets as binary matrices and translates them into MIDI messages, triggering notes whose velocity is a function of neuronal activity. To handle this vast quantity of data (several tens of thousands of neurons over several tens of thousands of samples), the software lets neurons be associated in sub-groups, either following common brain atlases or arbitrarily. The same interface can also be used to sonify continuous datasets from electroencephalography (EEG) recordings of human brain activity.

This software, developed with Max, can be used as a standalone program, but it can also be loaded directly as a plugin into the Ableton Live digital audio workstation. This makes it easy to get started and to test different mappings between neural activity data and musical parameters: which chords, harmonic progressions, orchestration, etc. render the dataset most interestingly, in terms of scientific understanding and/or musical aesthetics.
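To make the binary-matrix-to-MIDI idea concrete, here is a minimal Python sketch, not the Max implementation. It assumes a neurons × samples matrix of 0/1 values, hypothetical group and note tables (`groups`, `group_notes`), and a velocity equal to the fraction of a group’s neurons active at each sample, scaled to the 1 to 127 MIDI range.

```python
from dataclasses import dataclass


@dataclass
class NoteEvent:
    sample: int    # time index in the recording
    note: int      # MIDI note number assigned to the group
    velocity: int  # MIDI velocity, 1 to 127


def sonify(activity, groups, group_notes, threshold=0.0):
    """Turn a binary neurons x samples matrix into per-group note events.

    activity: list of rows, one per neuron, each a list of 0/1 values.
    groups: hypothetical mapping of group name -> list of neuron indices.
    group_notes: hypothetical mapping of group name -> MIDI note number.
    A note fires when the fraction of active neurons in a group exceeds
    `threshold`; its velocity is that fraction scaled to the MIDI range.
    """
    n_samples = len(activity[0]) if activity else 0
    events = []
    for t in range(n_samples):
        for name, members in groups.items():
            if not members:
                continue
            fraction = sum(activity[i][t] for i in members) / len(members)
            if fraction > threshold:
                velocity = max(1, min(127, round(fraction * 127)))
                events.append(NoteEvent(t, group_notes[name], velocity))
    return events


# Example: two groups (e.g. left/right hemispheres) tuned to C4 and G4.
activity = [
    [1, 0, 1],
    [1, 0, 0],
    [0, 1, 0],
    [0, 1, 1],
]
events = sonify(activity, {"left": [0, 1], "right": [2, 3]}, {"left": 60, "right": 67})
```

Swapping `group_notes` for other tunings, or replacing each single note by a chord, is the kind of mapping experiment described above; in a real setup the events would be sent out as MIDI note-on messages.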

References

- Vincent Goudard, Sébastien Wolf. The Brain Orchestra, un outil de sonification de l’activité neuronale. Journées d’Informatique Musicale, GRAME; INRIA, Jun 2025, Lyon, France. ⟨hal-05102386v1⟩

Media

Sonification of zebrafish neural activity

In the two videos below, you can see and hear the brain activity of a larval zebrafish, which has the good sense to be transparent, and therefore filmable with suitable microscopes. Its 80,000 neurons communicate by sending small electrical impulses to each other, following a dynamic and functional organization that neuroscience research strives to decipher.

1. Sonification of the ARTR region

The activity of the region selected here, the “Anterior Rhombencephalic Turning Region”, is correlated with the zebrafish’s swimming movements to the left or to the right. Sonifying the two hemispheres of this region (in red and blue) with different tunings brings out the alternation of their activity as the zebrafish turns.

2. Sonification of the habenula region

The region selected here is called the “habenula”. It is a small, deeply nested region of the brain, common to all vertebrates, which contains a few hundred neurons in the zebrafish. In this dataset, its activity shows asynchronous peaks, where groups of neurons tend to fire spikes in sequence rather than simultaneously. The resulting sonification is hence closer to a monodic melody than to a sequence of harmonic chords.

Sonification of a human brain’s EEG

This example shows the sonification of 16 EEG sensors (out of 64 in the whole dataset) placed on a human scalp, while the subject was asked to perform various visual identification tasks. The result reveals musical patterns at various timescales, along with various harmonic combinations (and non-combinations), suggesting synchronous activity in some regions of the brain and asynchronous activity in others. Overall, the gradual crescendos and decrescendos of the different EEG channels make for a pleasant cinematic soundtrack. Who knew all this (and more) was happening in your brain?
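Unlike spike matrices, EEG channels are continuous signals. One simple way to turn them into note velocities, assumed here for illustration and not necessarily what the tool does, is to compute a windowed RMS envelope per channel and normalize it to the MIDI range. The function names, the non-overlapping windows and the per-channel normalization are all hypothetical choices.

```python
import math


def rms_envelope(signal, window):
    """RMS level of each non-overlapping window of `window` samples."""
    envelope = []
    for start in range(0, len(signal), window):
        chunk = signal[start:start + window]
        envelope.append(math.sqrt(sum(x * x for x in chunk) / len(chunk)))
    return envelope


def envelope_to_velocities(envelope):
    """Scale an envelope to MIDI velocities (0 to 127) relative to its own peak.

    A velocity of 0 is read here as "no note" for that window.
    """
    peak = max(envelope, default=0.0)
    if peak == 0:
        return [0] * len(envelope)
    return [round(level / peak * 127) for level in envelope]


# Example: a channel whose amplitude doubles halfway through (a "crescendo").
channel = [1.0, -1.0, 1.0, -1.0, 2.0, -2.0, 2.0, -2.0]
velocities = envelope_to_velocities(rms_envelope(channel, window=4))
```

Normalizing each channel against its own peak keeps quiet sensors audible; a global normalization across channels would instead preserve their relative levels.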

Fine grain sonification with granular synthesis

This video shows the neural activity of a zebrafish larva, whose 23,743 neurons were recorded using light-sheet microscopy. The dataset is downsampled to 8,000 points, then fed to a granular synthesis engine: grains of sound are triggered according to the activity of this subset of neurons. The pitch of each grain is mapped to the position of its neuron along the spinal cord axis, spread over four octaves of a diatonic scale. This mapping highlights the spatial propagation of neural impulses (also called “spikes”) throughout the brain, resulting in rapid audible glissandi/arpeggi, with occasional “epileptic” clusters of notes. Each grain is then spatialized in a stereo output according to its 3D position.
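A minimal sketch of the pitch and panning mappings, assuming a C major scale starting at MIDI note 36 (C2), a linear mapping of the rostro-caudal coordinate to scale degrees, and an equal-power stereo pan driven by the lateral coordinate only. These choices, like the function names, are assumptions rather than the engine’s actual settings, and the downsampling step is not shown.

```python
import math

MAJOR_SCALE = [0, 2, 4, 5, 7, 9, 11]  # semitone offsets of one diatonic (major) octave


def position_to_pitch(axis_pos, axis_min, axis_max, base_note=36, octaves=4):
    """Map a coordinate along the body axis to a MIDI note of a diatonic scale."""
    degrees = len(MAJOR_SCALE) * octaves  # 28 scale degrees over four octaves
    t = (axis_pos - axis_min) / (axis_max - axis_min)
    t = min(max(t, 0.0), 1.0)  # clamp positions outside the given bounds
    degree = min(int(t * degrees), degrees - 1)  # the far end maps to the top degree
    octave, step = divmod(degree, len(MAJOR_SCALE))
    return base_note + 12 * octave + MAJOR_SCALE[step]


def position_to_pan(x, x_min, x_max):
    """Equal-power stereo gains (left, right) from a lateral coordinate."""
    angle = (x - x_min) / (x_max - x_min) * math.pi / 2
    return math.cos(angle), math.sin(angle)
```

Quantizing to scale degrees rather than to continuous pitch is what turns a propagating wave of activity into audible arpeggi instead of smooth sweeps.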

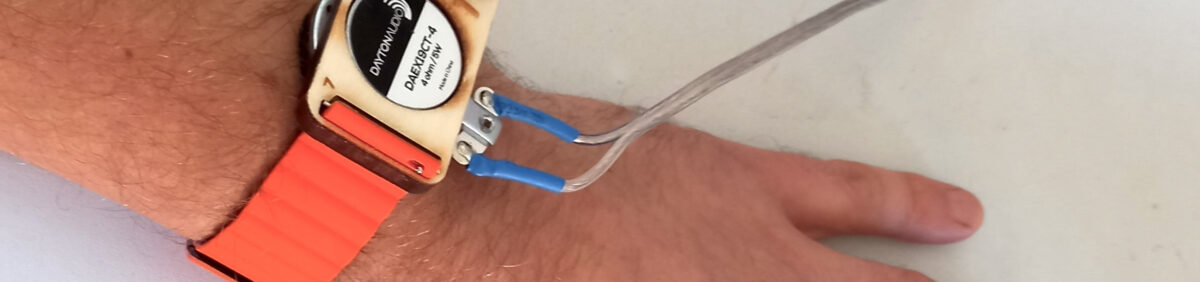

Staccato – Musical sound streams vibrification

Vibrotactile transformation of musical audio streams

Vibrification, the transformation of audio streams into tactile vibration streams, requires dedicated algorithms that translate perceptible cues in the audible domain into vibratory cues in the tactile domain. Although auditory and tactile perception have similarities, and in particular share part of their sensitive frequency range, they differ in many respects. Consequently, vibrifying an audio signal requires a set of strategies for selecting which elements to translate into vibrations. To this end, as part of the “Staccato” project, a set of free and open-source tools has been developed in Max:

- a framework based on the “Model-View-Controller” (MVC) pattern to facilitate settings and experimentation

- a set of algorithms that adapt to different types of vibrotactile transducers and offer a choice between various vibrification strategies, depending on the content of the audio signal and the user’s preferences.

This set of tools aims to ease the exploration of the vibrotactile modality for musical sound diffusion, supported by a recent boom in technical devices that make it possible.
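As one illustration of a vibrification strategy (a common textbook approach, not necessarily one of Staccato’s algorithms), the sketch below follows the amplitude envelope of the audio and re-imposes it on a sine carrier at 250 Hz, a frequency often cited as close to the peak of vibrotactile sensitivity. The attack and release times, the carrier frequency and the function names are hypothetical.

```python
import math


def envelope_follower(samples, sample_rate, attack_ms=5.0, release_ms=50.0):
    """One-pole envelope follower with separate attack and release times."""
    att = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    rel = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    env, out = 0.0, []
    for x in samples:
        level = abs(x)
        coeff = att if level > env else rel  # rise fast, fall slowly
        env = coeff * env + (1.0 - coeff) * level
        out.append(env)
    return out


def vibrify(samples, sample_rate, carrier_hz=250.0):
    """Re-impose the audio's amplitude envelope on a sine carrier in the tactile range."""
    env = envelope_follower(samples, sample_rate)
    return [
        e * math.sin(2 * math.pi * carrier_hz * n / sample_rate)
        for n, e in enumerate(env)
    ]
```

This strategy keeps rhythm and dynamics but discards pitch and timbre; other strategies would select different cues, which is why a choice of strategies is useful.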

The Staccato project was funded by the French National Research Agency (ANR-19-CE38-0008) and coordinated by Hugues Genevois from the Luthery-Acoustics-Music team at the ∂’Alembert Institute, Sorbonne University.

Code is available on GitHub.

Reference

Vincent Goudard, Hugues Genevois. Transformation vibrotactile de signaux musicaux. Journées d’Informatique Musicale, Laboratoire PRISM; Association Francophone d’Informatique Musicale, May 2024, Marseille, France.

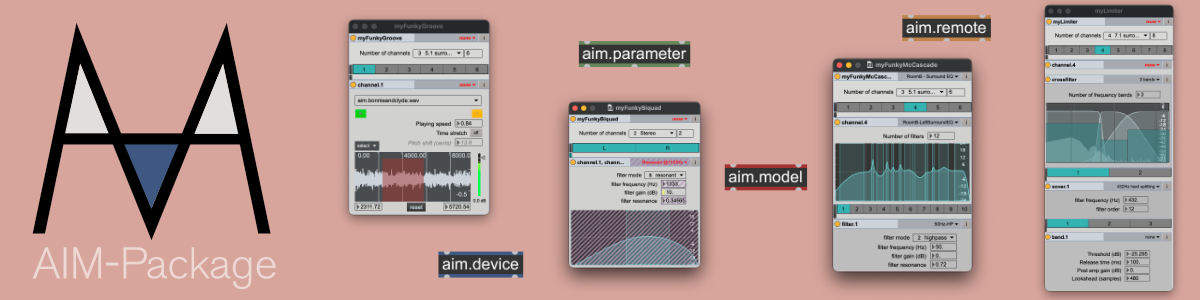

AIM-Framework

Designing a complete in-car audio experience requires rapid prototyping solutions in a complex audio configuration, bringing together many areas of expertise: from sound design and composition down to hardware protection, with every conceivable layer of audio engineering in between, up to A/B comparison setups for evaluating end-user perception in real demonstration vehicles.

The AIM project started as a request from the Active Sound eXperience team at Volvo Cars to meet these goals. To this end, it was decided to develop a framework on top of Max/MSP, so that dedicated audio processing modules could be created easily, with the ability to store presets for various configurations, and so that Max’s modular design could be used to distribute the complexity of audio engineering among the various expert teams involved in the project.

The core part of the package (the building blocks) was presented at the Sound and Music Computing Conference (SMC’22), organized by GRAME in Saint-Étienne, France.

Summary: https://zenodo.org/record/6800815

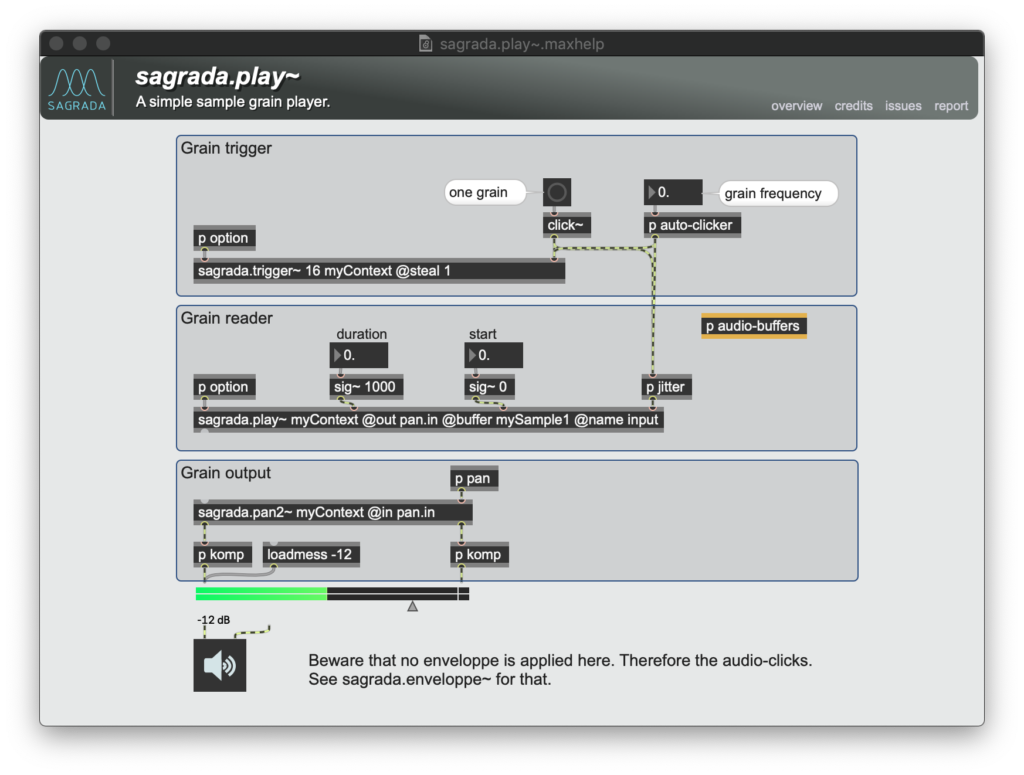

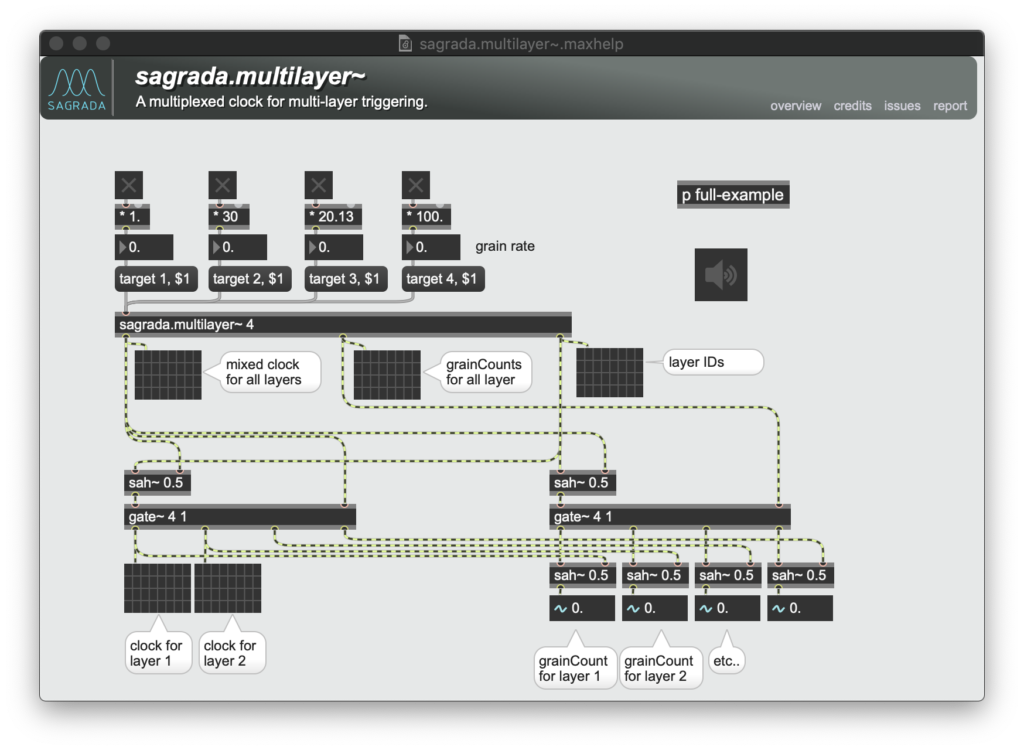

Sagrada — Sample Accurate Granular Synthesis

Sagrada is an open-source Max package for sample-accurate, modular granular synthesis. Grains can be triggered both synchronously and asynchronously. Each grain can have its own effects and envelopes (for instance, the first “attack” grain and the last “release” grain of a grain stream).

You can get it from the GitHub repository:

https://github.com/vincentgoudard/Sagrada

Sagrada was partly developed during my PhD at LAM. It was inspired by the excellent GMU tools developed at GMEM (and their sample-rate triggering) and by the FTM package developed at IRCAM (and its modularity), not to mention all of Curtis Roads’ work on granular synthesis.
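To illustrate what sample-accurate triggering and per-grain roles mean, here is a small scheduling sketch in Python, not Sagrada’s code: grain onsets are integer sample indices, the inter-onset interval is either fixed (synchronous) or randomly jittered (asynchronous), and the first and last grains of a stream are tagged so they could receive their own “attack” and “release” envelopes. The `Grain` class, `schedule_stream` function and `jitter` parameter are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Grain:
    onset: int  # onset in samples from the start of the stream
    role: str   # "attack", "sustain" or "release"


def schedule_stream(duration, period, synchronous=True, jitter=0.5, seed=None):
    """Return the grains of a stream lasting `duration` samples.

    Synchronous streams use a fixed inter-onset interval of `period` samples;
    asynchronous streams draw each interval uniformly within +/- `jitter`
    (as a fraction) around `period`.
    """
    rng = random.Random(seed)
    onsets, t = [], 0
    while t < duration:
        onsets.append(t)
        if synchronous:
            t += period
        else:
            t += max(1, round(period * (1 + rng.uniform(-jitter, jitter))))
    last = len(onsets) - 1
    # The first grain is tagged "attack" (it wins if the stream has one grain).
    return [
        Grain(onset, "attack" if i == 0 else "release" if i == last else "sustain")
        for i, onset in enumerate(onsets)
    ]


sync = schedule_stream(1000, 250)
# sync onsets: 0, 250, 500, 750 with roles attack, sustain, sustain, release
```

Keeping onsets as integer sample positions, rather than rounding them to a control-rate clock, is what lets grain timing stay exact at short periods.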

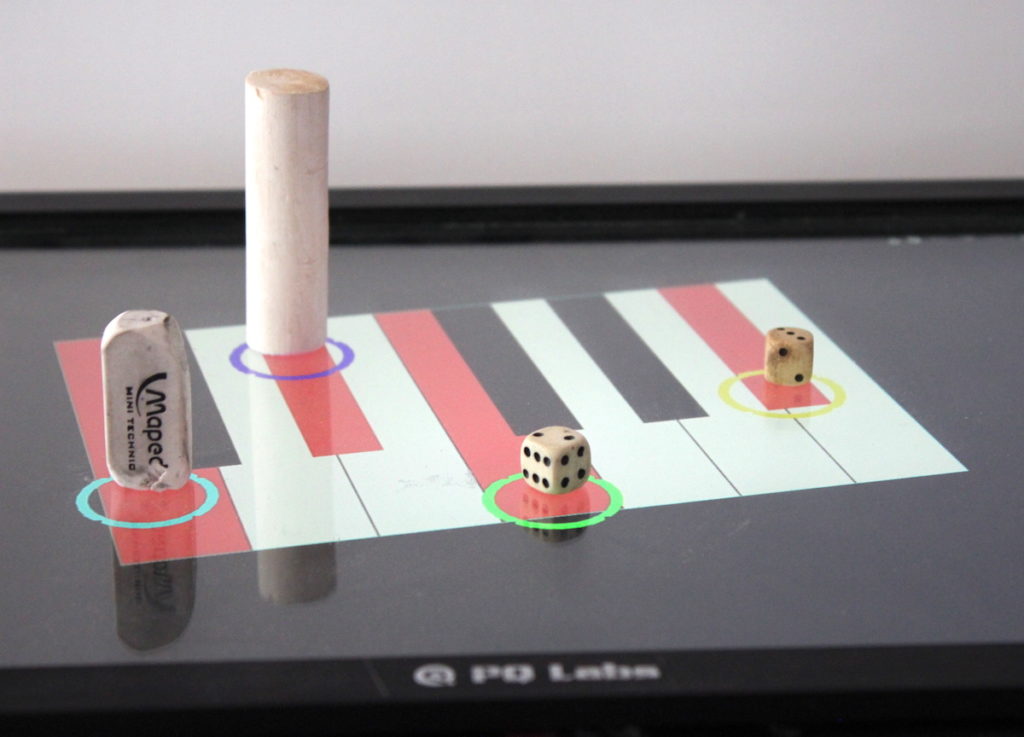

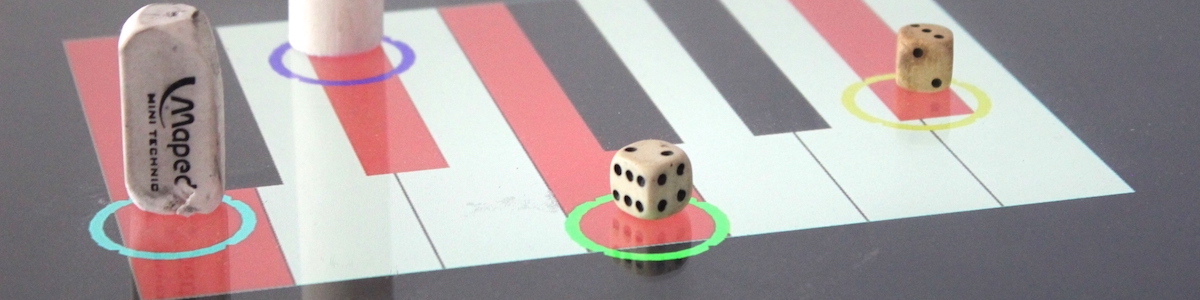

Tangible user interfaces @ ICLI, Porto

After presenting “John, the semi-conductor” at the TENOR conference three weeks ago (http://matralab.hexagram.ca/tenor2018), I have just arrived in Porto to attend the International Conference on Live Interfaces, aka ICLI 2018 (http://www.liveinterfaces.org/), and present some work on tangible user interfaces.

Very happy to be here: the city is beautiful and the conference program is pretty exciting!

Table Sonotactile Interactive

Image ©Anne Maregiano.

The Interactive Sonotactile Table is a device invented for the Maison des Aveugles (“House of the Blind”) in Lyon by French composer Pascale Criton, in collaboration with Hugues Genevois from the Luthery-Acoustics-Music team of the Jean Le Rond d’Alembert Institute and Gérard Uzan, a researcher in accessibility. The table was designed by Pierrick Faure (Captain Ludd) in collaboration with Christophe Lebreton (GRAME).

I programmed the embedded Arduino boards as well as the Max patch for the interactive gesture/sound design.

The Table Sonotactile Interactive is part of a larger project, La Carte Sonore, by Anne Maregiano at the Villa Saint Raphaël: https://www.mda-lacartesonore.com.

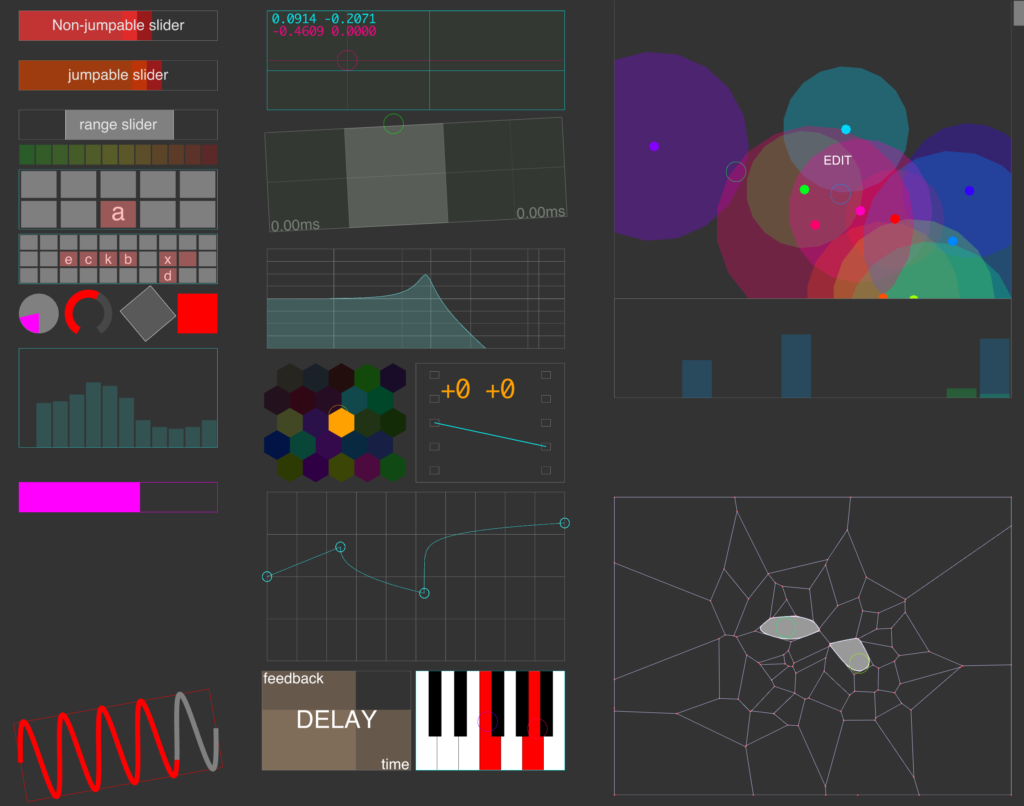

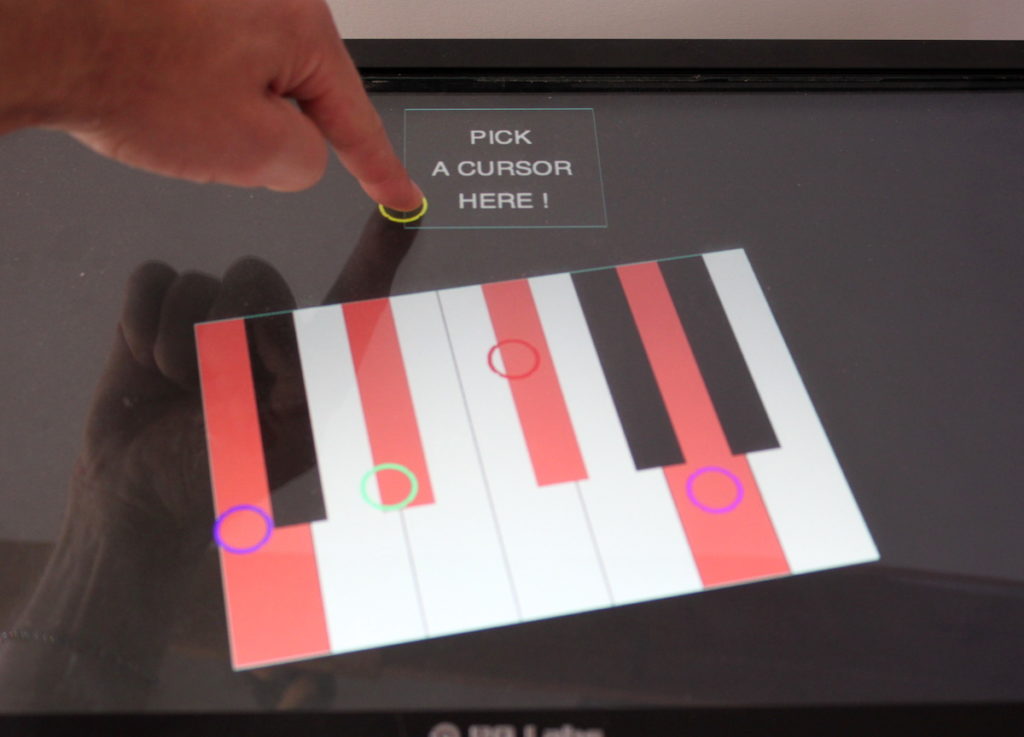

mp.TUI — a Max package for multitouch screen interaction

mp.TUI is a Max package with OpenGL UI components ready for multitouch interaction (using the TUIO protocol).

It was presented at the ICLI 2018 conference in Porto and has been used in a series of projects, including the Phonetogramme, Xypre and FIB_R.

Sources are available on GitHub: https://github.com/LAM-IJLRA/ModularPolyphony-TUI
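For readers unfamiliar with TUIO: in version 1.1 of the protocol, each frame of a `/tuio/2Dcur` bundle carries an `alive` message listing the active session IDs, one `set` message per updated cursor (session ID, normalized x and y, velocity components, acceleration), and an `fseq` frame number. The sketch below, written in Python rather than Max and not taken from mp.TUI, assumes messages have already been decoded from OSC into `(address, args)` tuples; `CursorTracker` is a hypothetical name.

```python
class CursorTracker:
    """Track TUIO 1.1 2D cursors from decoded /tuio/2Dcur messages."""

    def __init__(self):
        self.cursors = {}    # session id -> (x, y), normalized to 0..1
        self._alive = set()  # session ids listed by the latest "alive" message
        self._pending = {}   # positions received by "set" in the current frame

    def handle(self, address, args):
        if address != "/tuio/2Dcur" or not args:
            return
        command = args[0]
        if command == "alive":
            self._alive = set(args[1:])
        elif command == "set":
            # set s x y X Y m: only the session id and position are kept here
            session_id, x, y = args[1], args[2], args[3]
            self._pending[session_id] = (x, y)
        elif command == "fseq":
            # End of frame: apply updates, then drop cursors no longer alive.
            self.cursors.update(self._pending)
            self.cursors = {
                sid: pos for sid, pos in self.cursors.items() if sid in self._alive
            }
            self._pending.clear()


tracker = CursorTracker()
for message in [
    ("/tuio/2Dcur", ["alive", 1, 2]),
    ("/tuio/2Dcur", ["set", 1, 0.1, 0.2, 0.0, 0.0, 0.0]),
    ("/tuio/2Dcur", ["set", 2, 0.5, 0.5, 0.0, 0.0, 0.0]),
    ("/tuio/2Dcur", ["fseq", 1]),
]:
    tracker.handle(*message)
```

Committing updates only on `fseq` means a UI component sees each multitouch frame as a consistent snapshot, and a finger lifted from the screen disappears as soon as its ID leaves the `alive` list.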